Table of Contents¶

After reading a couple of posts by Michael Lopez about the NFL draft, I decided to recreate some of his analysis using Python (instead of R).

First up lets import most of the stuff we will be using.

NOTE: You can find the github repository for this blog post here. It contains this notebook, the data and the conda environment I used.

%matplotlib inline

import matplotlib.pyplot as plt

import seaborn as sns

import pandas as pd

from urllib.request import urlopen

from bs4 import BeautifulSoup

Web Scraping¶

Before we can do anything we will need some data. We'll be scraping draft data from Pro-Football-Reference and then cleaning it up for the analysis.

We will use BeautifulSoup to scrape the data and then store it into a pandas Dataframe.

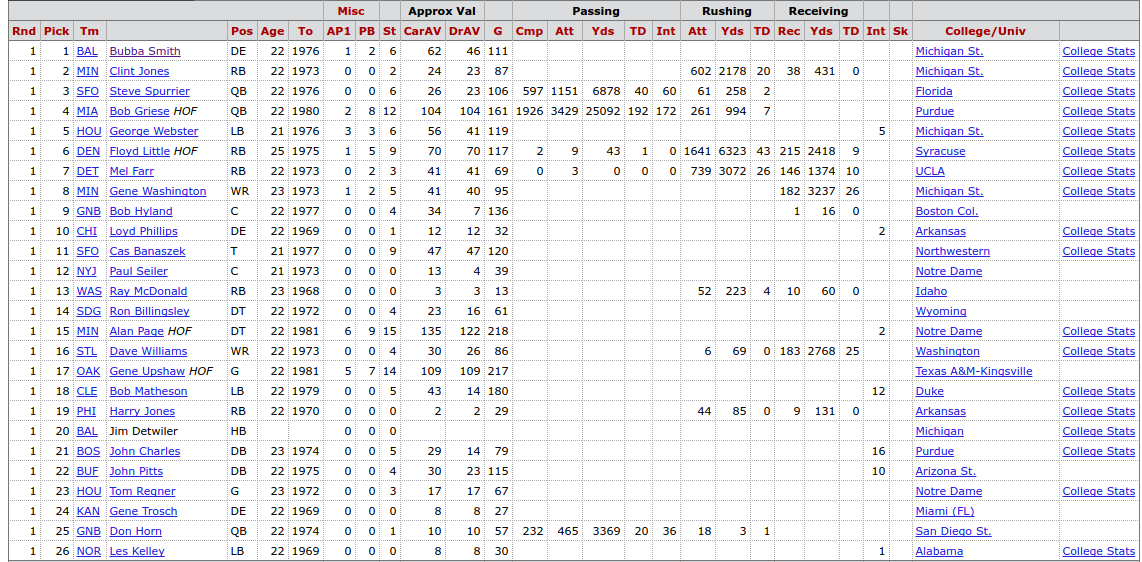

To get a feel of the data lets take a look at the 1967 draft.

Above is just a small part of the draft table found on the web page. We will extract the second row of column headers and all information for each pick. While we we are at it, we will also scrape each player's Pro-Football-Reference player page link and college stats link. This way if we ever want to scrape data from their player page in the future, we can.

# The url we will be scraping

url_1967 = "http://www.pro-football-reference.com/years/1967/draft.htm"

# get the html

html = urlopen(url_1967)

# create the BeautifulSoup object

soup = BeautifulSoup(html, "lxml")

Scraping the Column Headers¶

The column headers we need for our DataFrame are found in the second row of column headers PFR table. We will will scrape those and add two additional columns headers for the two additional player page links.

# Extract the necessary values for the column headers from the table

# and store them as a list

column_headers = [th.getText() for th in

soup.findAll('tr', limit=2)[1].findAll('th')]

# Add the two additional column headers for the player links

column_headers.extend(["Player_NFL_Link", "Player_NCAA_Link"])

Scraping the Data¶

We can easily extract the rows of data using the CSS selector "#draft tr". What we are essentially doing is selecting table row elements within the HTML element that has the id value "draft".

A really helpful tool when it comes to finding CSS selectors is SelectorGadget. It's a web extension that lets you click on different elements of a web page and provides the CSS selector for those selected elements.

# The data is found within the table rows of the element with id=draft

# We want the elements from the 3rd row and on

table_rows = soup.select("#drafts tr")[2:]

Note that table_rows is a list of tag elements.

type(table_rows)

type(table_rows[0])

table_rows[0] # take a look at the first row

The data we want for each player is found within the the td (or table data) elements.

Below I've created a function that extracts the data we want from table_rows. The comments should walk you through what each part of the function does.

def extract_player_data(table_rows):

"""

Extract and return the the desired information from the td elements within

the table rows.

"""

# create the empty list to store the player data

player_data = []

for row in table_rows: # for each row do the following

# Get the text for each table data (td) element in the row

# Some player names end with ' HOF', if they do, get the text excluding

# those last 4 characters,

# otherwise get all the text data from the table data

player_list = [td.get_text()[:-4] if td.get_text().endswith(" HOF")

else td.get_text() for td in row.find_all("td")]

# there are some empty table rows, which are the repeated

# column headers in the table

# we skip over those rows and and continue the for loop

if not player_list:

continue

# Extracting the player links

# Instead of a list we create a dictionary, this way we can easily

# match the player name with their pfr url

# For all "a" elements in the row, get the text

# NOTE: Same " HOF" text issue as the player_list above

links_dict = {(link.get_text()[:-4] # exclude the last 4 characters

if link.get_text().endswith(" HOF") # if they are " HOF"

# else get all text, set thet as the dictionary key

# and set the url as the value

else link.get_text()) : link["href"]

for link in row.find_all("a", href=True)}

# The data we want from the dictionary can be extracted using the

# player's name, which returns us their pfr url, and "College Stats"

# which returns us their college stats page

# add the link associated to the player's pro-football-reference page,

# or en empty string if there is no link

player_list.append(links_dict.get(player_list[3], ""))

# add the link for the player's college stats or an empty string

# if ther is no link

player_list.append(links_dict.get("College Stats", ""))

# Now append the data to list of data

player_data.append(player_list)

return player_data

Now we can create a DataFrame with the data from the 1967 draft.

# extract the data we want

data = extract_player_data(table_rows)

# and then store it in a DataFrame

df_1967 = pd.DataFrame(data, columns=column_headers)

df_1967.head()

Scraping the Data for All Seasons Since 1967¶

Scraping the for all drafts since 1967 follows is essentially the same process as above, just repeated for each draft year, using a for loop.

As we loop over the years, we will create a DataFrame for each draft, and append it to a large list of DataFrames that contains all the drafts. We will also have a separate list that will contain any errors and the url associated with that error. This will let us know if there are any issues with our scraper, and which url is causing the error. We will also have to add an additional column for tackles. Tackles show up after the 1993 season, so that is a column we need to insert into the DataFrames we create for the drafts from 1967 to 1993.

# Create an empty list that will contain all the dataframes

# (one dataframe for each draft)

draft_dfs_list = []

# a list to store any errors that may come up while scraping

errors_list = []

# The url template that we pass in the draft year inro

url_template = "http://www.pro-football-reference.com/years/{year}/draft.htm"

# for each year from 1967 to (and including) 2016

for year in range(1967, 2017):

# Use try/except block to catch and inspect any urls that cause an error

try:

# get the draft url

url = url_template.format(year=year)

# get the html

html = urlopen(url)

# create the BeautifulSoup object

soup = BeautifulSoup(html, "lxml")

# get the column headers

column_headers = [th.getText() for th in

soup.findAll('tr', limit=2)[1].findAll('th')]

column_headers.extend(["Player_NFL_Link", "Player_NCAA_Link"])

# select the data from the table using the '#drafts tr' CSS selector

table_rows = soup.select("#drafts tr")[2:]

# extract the player data from the table rows

player_data = extract_player_data(table_rows)

# create the dataframe for the current years draft

year_df = pd.DataFrame(player_data, columns=column_headers)

# if it is a draft from before 1994 then add a Tkl column at the

# 24th position

if year < 1994:

year_df.insert(24, "Tkl", "")

# add the year of the draft to the dataframe

year_df.insert(0, "Draft_Yr", year)

# append the current dataframe to the list of dataframes

draft_dfs_list.append(year_df)

except Exception as e:

# Store the url and the error it causes in a list

error =[url, e]

# then append it to the list of errors

errors_list.append(error)

len(errors_list)

errors_list

We don't get any errors, so that's good.

Now we can concatenate all the DataFrames we scraped and create one large DataFrame containing all the drafts.

# store all drafts in one DataFrame

draft_df = pd.concat(draft_dfs_list, ignore_index=True)

# Take a look at the first few rows

draft_df.head()

We should edit the columns a bit as there are some repeated column headers and some are even empty strings.

# get the current column headers from the dataframe as a list

column_headers = draft_df.columns.tolist()

# The 5th column header is an empty string, but represesents player names

column_headers[4] = "Player"

# Prepend "Rush_" for the columns that represent rushing stats

column_headers[19:22] = ["Rush_" + col for col in column_headers[19:22]]

# Prepend "Rec_" for the columns that reperesent receiving stats

column_headers[23:25] = ["Rec_" + col for col in column_headers[23:25]]

# Properly label the defensive int column as "Def_Int"

column_headers[-6] = "Def_Int"

# Just use "College" as the column header represent player's colleger or univ

column_headers[-4] = "College"

# Take a look at the updated column headers

column_headers

# Now assign edited columns to the DataFrame

draft_df.columns = column_headers

Now that we fixed up the necessary columns, let's write out the raw data to a CSV file.

# Write out the raw draft data to the raw_data fold in the data folder

draft_df.to_csv("data/raw_data/pfr_nfl_draft_data_RAW.csv", index=False)

Cleaning the Data¶

Now that we have the raw draft data, we need to clean it up a bit in order to do some of the data exploration we want.

Create a Player ID/Links DataFrame¶

First lets create a separate DataFrame that contains the player names, their player page links, and the player ID on Pro-Football-Reference. This way we can have a separate CSV file that just contains the necessary information to extract individual player data for Pro-Football-Reference sometime in the future.

To extract the Pro-Football-Reference player ID from the player link, we will need to use a regular expression. Regular expressions are a sequence of characters used to match some pattern in a body of text. The regular expression that we can use to match the pattern of the player link and extract the ID is as follows:

/.*/.*/(.*)\.

What the above regular expression essentially says is match the string with the following pattern:

- One

'/'. - Followed by 0 or more characters (this is represented by the

'.*'characters). - Followed by another

'/'(the 2nd'/'character). - Followed by 0 or more characters (again the

'.*'characters) . - Followed by another (3rd)

'/'. - Followed by a grouping of 0 or more characters (the

'(.*)'characters).- This is the key part of our regular expression. The

'()'characters create a grouping around the characters we want to extract. Since the player IDs are found between the 3rd'/'and the'.', we use'(.*)'to extract all the characters found in that part of our string.

- This is the key part of our regular expression. The

- Followed by a

'.', character after the player ID.

We can extract the IDs by passing the above regular expression into the pandas extract method.

# extract the player id from the player links

# expand=False returns the IDs as a pandas Series

player_ids = draft_df.Player_NFL_Link.str.extract("/.*/.*/(.*)\.",

expand=False)

# add a Player_ID column to our draft_df

draft_df["Player_ID"] = player_ids

# add the beginning of the pfr url to the player link column

pfr_url = "http://www.pro-football-reference.com"

draft_df.Player_NFL_Link = pfr_url + draft_df.Player_NFL_Link

Now we can save a DataFrame just containing the player names, IDs, and links.

# Get the Player name, IDs, and links

player_id_df = draft_df.loc[:, ["Player", "Player_ID", "Player_NFL_Link",

"Player_NCAA_Link"]]

# Save them to a CSV file

player_id_df.to_csv("data/clean_data/pfr_player_ids_and_links.csv",

index=False)

Cleaning Up the Rest of the Draft Data¶

Now that we are done with the play ID stuff lets get back to dealing with the draft data.

Lets first drop some unnecessary columns.

# drop the the player links and the column labeled by an empty string

draft_df.drop(draft_df.columns[-4:-1], axis=1, inplace=True)

The main issue left with the rest of the draft data is converting everything to their proper data type.

draft_df.info()

From the above we can see that a lot of the player data isn't numeric when it should be. To convert all the columns to their proper numeric type we can apply the to_numeric function to the whole DataFrame. Because it is impossible to convert some of the columns (e.g. Player, Tm, etc.) into a numeric type (since they aren't numbers) we need to set the errors parameter to "ignore" to avoid raising any errors.

# convert the data to proper numeric types

draft_df = draft_df.apply(pd.to_numeric, errors="ignore")

draft_df.info()

We are not done yet. A lot of out numeric columns are missing data because players didn't accumulate any of those stats. For example, some players didn't score a TD or even play a game. Let's select the columns with numeric data and then replace the NaNs (the current value that represents the missing data) with 0s, as that is a more appropriate value.

# Get the column names for the numeric columns

num_cols = draft_df.columns[draft_df.dtypes != object]

# Replace all NaNs with 0

draft_df.loc[:, num_cols] = draft_df.loc[:, num_cols].fillna(0)

# Everything is filled, except for Player_ID, which is fine for now

draft_df.info()

We are finally done cleaning the data and now we can save it to a CSV file.

draft_df.to_csv("data/clean_data/pfr_nfl_draft_data_CLEAN.csv", index=False)

Exploring the NFL Draft¶

Now that we are done getting and cleaning the data we want, we can finally have some fun. First lets just keep the draft data up to and including the 2010 draft, as players who have been drafted more recently haven't played enough to accumulate have a properly representative career Approximate Value (or cAV).

# get data for drafts from 1967 to 2010

draft_df_2010 = draft_df.loc[draft_df.Draft_Yr <= 2010, :]

draft_df_2010.tail() # we see that the last draft is 2010

The Career Approximate Value distribution¶

Using seaborn's distplot function we can quickly view the shape of the cAV distribution as both a histogram and kernel density estimation plot.

# set some plotting styles

from matplotlib import rcParams

# set the font scaling and the plot sizes

sns.set(font_scale=1.65)

rcParams["figure.figsize"] = 12,9

# Use distplot to view the distribu

sns.distplot(draft_df_2010.CarAV)

plt.title("Distribution of Career Approximate Value")

plt.xlim(-5,150)

plt.show()

We can also view the distributions by position via the boxplot function.

sns.boxplot(x="Pos", y="CarAV", data=draft_df_2010)

plt.title("Distribution of Career Approximate Value by Position (1967-2010)")

plt.show()

From both of the above plots we see that most players don't end up doing much in their NFL careers as most players hover around the 0-10 cAV range.

There are also some positions that have a 0 cAV for the whole distribution or a very low (and small) cAV distribution. As we can see from the value counts below, that's probably due to the fact that there are very few players with those position labels.

# Look at the counts for each position

draft_df_2010.Pos.value_counts()

Let's drop those position and merge the "HB" players with the "RB" players.

# drop players from the following positions [FL, E, WB, KR]

drop_idx = ~ draft_df_2010.Pos.isin(["FL", "E", "WB", "KR"])

draft_df_2010 = draft_df_2010.loc[drop_idx, :]

# Now replace HB label with RB label

draft_df_2010.loc[draft_df_2010.Pos == "HB", "Pos"] = "RB"

Lets take a look at the positional distributions again.

sns.boxplot(x="Pos", y="CarAV", data=draft_df_2010)

plt.title("Distribution of Career Approximate Value by Position (1967-2010)")

plt.show()

Fitting a Draft Curve¶

Now we can fit a curve to take a look at the cAV at each pick. We will fit the curve using local regression, which "travels" along the data fitting a curve to small chunks of the data at a time. A cool visualization (from the Simply Statistics blog) of this process can be seen below:

seaborn lets us plot a Lowess curve pretty easily by using regplot and setting the lowess parameter to True.

# plot LOWESS curve

# set line color to be black, and scatter color to cyan

sns.regplot(x="Pick", y="CarAV", data=draft_df_2010, lowess=True,

line_kws={"color": "black"},

scatter_kws={"color": sns.color_palette()[5], "alpha": 0.5})

plt.title("Career Approximate Value by Pick")

plt.xlim(-5, 500)

plt.ylim(-5, 200)

plt.show()

We can also fit a Lowess curve for each position using lmplot and setting hue to "Pos".

# Fit a LOWESS curver for each position

sns.lmplot(x="Pick", y="CarAV", data=draft_df_2010, lowess=True, hue="Pos",

size=10, scatter=False)

plt.title("Career Approximate Value by Pick and Position")

plt.xlim(-5, 500)

plt.ylim(-1, 60)

plt.show()

The above plot is a bit too messy as there are too many lines. We can actually separate the curves out and plot the position curves individually. To do this instead of setting hue to "Pos" we can set col to "Pos". To organize the plots in 5x3 grid all we must set col_wrap to 5.

lm = sns.lmplot(x="Pick", y="CarAV", data=draft_df_2010, lowess=True, col="Pos",

col_wrap=5, size=4, line_kws={"color": "black"},

scatter_kws={"color": sns.color_palette()[5], "alpha": 0.7})

# add title to the plot (which is a FacetGrid)

# https://stackoverflow.com/questions/29813694/how-to-add-a-title-to-seaborn-facet-plot

plt.subplots_adjust(top=0.9)

lm.fig.suptitle("Career Approximate Value by Pick and Position",

fontsize=30)

plt.xlim(-5, 500)

plt.ylim(-1, 100)

plt.show()

Additional Resources¶

Here are some additional sources that cover this kind of stuff:

- Check out all of Michael Lopez's recent articles regarding not just the nfl draft, but also constructing and comparing draft curves for each of the major sports:

- If you are just starting out with Python I suggest reading Automate the Boring Stuff with Python. There are chapters that cover both web scraping and regular expressions.

Please let me know of any mistakes, questions or suggestions you may have by leaving a comment below. You can also hit me up on Twitter (@savvastj) or by email (savvas.tjortjoglou@gmail.com).

Comments

comments powered by Disqus